When Agents Talk to Agents: Reflections from Austin LangChain Office Hours

Yesterday I hosted our regular Austin LangChain office hours, and I’m still processing the wealth of ideas that emerged from just 68 minutes of conversation. It’s these kinds of discussions that remind me why I left the corporate world to focus on building in this space.

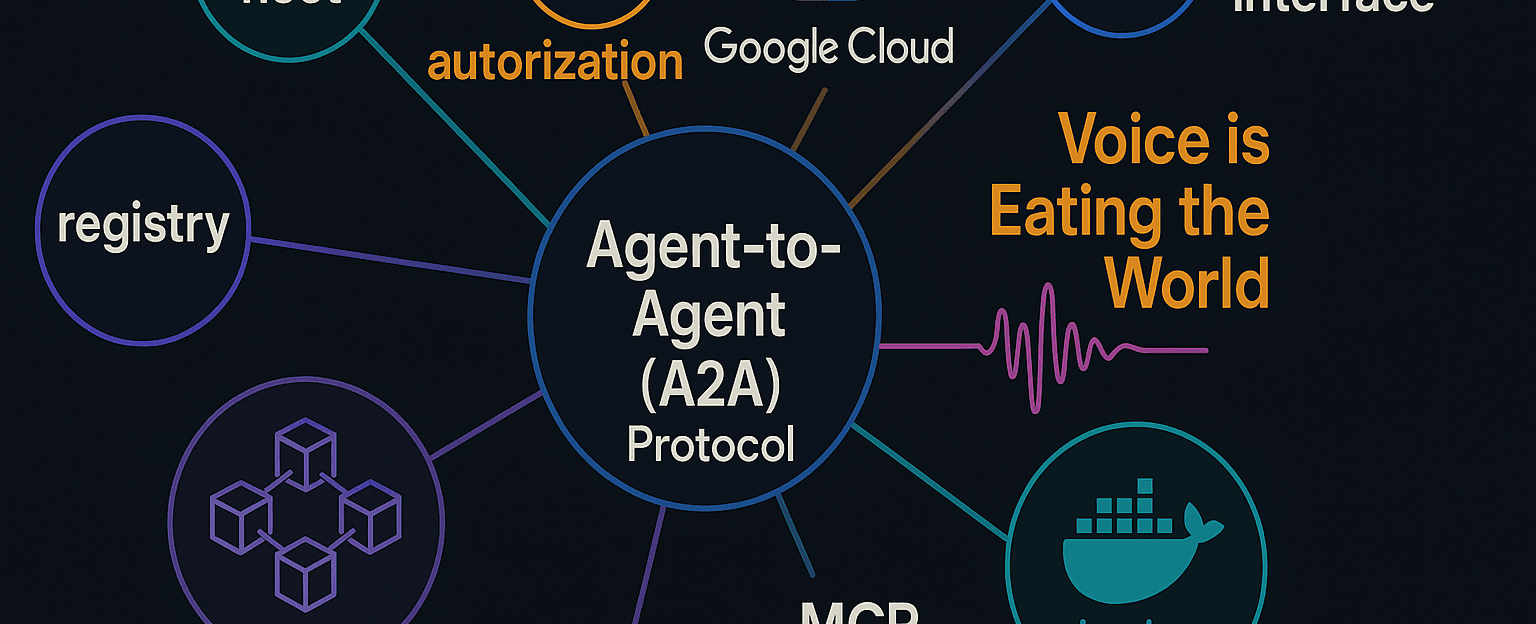

The A2A Protocol: Beyond Tool Mounting

One thread that particularly excited me was our exploration of the Agent-to-Agent (A2A) protocol. As MCP (Model Context Protocol) servers have evolved, they've given us the ability to mount tools, or to expose agents as tools, for other agents to use. The A2A protocol takes this a step further by letting agents discover and communicate with each other directly.

I described it to the group as “almost like a service registration for AI microservices.” The potential here is enormous: imagine a horizontally scalable registry where agents advertise their capabilities and connection methods, both within your domain and across domains.
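
A minimal sketch of that registry idea in Python. The `AgentCard` and `AgentRegistry` names, their fields, and the domain-based sharing rule are all hypothetical. They are loosely inspired by A2A's notion of an agent publishing a card that describes its skills, but they are not the A2A schema or any Google API, and a real registry would be a replicated service rather than an in-memory dict.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class AgentCard:
    """Hypothetical capability advertisement (not the A2A schema)."""

    name: str
    domain: str                # owning organization, e.g. "acme.example"
    endpoint: str              # connection method: where callers reach the agent
    skills: FrozenSet[str]     # capabilities the agent advertises
    shared_with: FrozenSet[str] = frozenset()  # other domains allowed to discover it


class AgentRegistry:
    """In-memory stand-in for a horizontally scalable registry service."""

    def __init__(self) -> None:
        self._cards: Dict[str, AgentCard] = {}

    def register(self, card: AgentCard) -> None:
        # Key by domain and name so two organizations can each run a "scheduler".
        self._cards[f"{card.domain}/{card.name}"] = card

    def find(self, skill: str, caller_domain: str) -> List[AgentCard]:
        """Return agents that offer `skill` and are visible to `caller_domain`."""
        return [
            card
            for card in self._cards.values()
            if skill in card.skills
            and (card.domain == caller_domain or caller_domain in card.shared_with)
        ]


registry = AgentRegistry()
registry.register(AgentCard(
    name="scheduler",
    domain="acme.example",
    endpoint="https://agents.acme.example/scheduler",
    skills=frozenset({"calendar"}),
    shared_with=frozenset({"partner.example"}),
))
registry.register(AgentCard(
    name="copywriter",
    domain="acme.example",
    endpoint="https://agents.acme.example/copywriter",
    skills=frozenset({"marketing-copy"}),
))

print([c.name for c in registry.find("calendar", "partner.example")])        # ['scheduler']
print([c.name for c in registry.find("marketing-copy", "partner.example")])  # []
print([c.name for c in registry.find("marketing-copy", "acme.example")])     # ['copywriter']
```

The `shared_with` field is the simplest possible stand-in for cross-domain visibility: an agent is discoverable inside its own domain by default and only by the partner domains it explicitly lists.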

I’m meeting with Google Cloud tomorrow to discuss their implementation, but I’m already envisioning practical applications. What if, instead of emailing someone, you could interact directly with their agent fleet? With proper authorization controls, of course.

As I put it during the discussion: “I can clearly see within a number of months that I expect to have just a fleet of agents, and I expect others to have a fleet of agents. Why email the person if I can just interact with their agents?”

Voice Is Eating The World

Another fascinating thread was our discussion of voice interfaces for AI workflows. I shared my experience using MacWhisper for voice-to-text transcription:

“I can speak at 212 words a minute and I can type between 60 and 80 if I’m really going for it. So I love transcribing to my AI coder.”
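
Taking those quoted rates at face value, the speed-up from dictation works out as follows. The 1,000-word prompt is a hypothetical example, not a measured figure.

```latex
\frac{212}{80} = 2.65 \quad\text{to}\quad \frac{212}{60} \approx 3.53
\qquad\text{(speaking vs. typing speed-up)}

t_{\text{speak}} = \frac{1000\ \text{words}}{212\ \text{wpm}} \approx 4.7\ \text{min},
\qquad
t_{\text{type}} = \frac{1000\ \text{words}}{80\ \text{wpm}} = 12.5\ \text{min}
\ \text{to}\ \frac{1000\ \text{words}}{60\ \text{wpm}} \approx 16.7\ \text{min}
```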

This efficiency gain has transformed my workflow, especially for my brand development work at Always Cool Brands. I'm now creating entire websites and marketing copy just by having conversations and distilling coffee chats, with no typing required.

Karim shared his experience with FastRTC, which dramatically reduces the boilerplate code needed for voice applications. We explored the possibility of creating a unified voice server that works across web, mobile, and telephone interfaces.
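
One way to picture a unified voice server is a single agent loop written against a transport-agnostic interface, with one adapter per channel. The sketch below assumes speech-to-text and text-to-speech happen inside each adapter, so the core loop only sees text. `VoiceChannel`, `run_agent`, `FakeChannel`, and `echo_agent` are hypothetical names, and none of this is FastRTC's API.

```python
from typing import Callable, Iterable, List, Protocol


class VoiceChannel(Protocol):
    """Hypothetical transport interface. A web (WebRTC), mobile, or telephone adapter
    would implement these methods, doing speech-to-text and text-to-speech internally."""

    def incoming_utterances(self) -> Iterable[str]:
        """Yield transcribed user utterances as they arrive."""
        ...

    def speak(self, text: str) -> None:
        """Synthesize `text` and play it back to the user."""
        ...


def run_agent(channel: VoiceChannel, reply: Callable[[str], str]) -> None:
    """Transport-agnostic loop: the agent logic is written once and reused on every channel."""
    for utterance in channel.incoming_utterances():
        channel.speak(reply(utterance))


class FakeChannel:
    """Test double standing in for a real adapter; it records what the agent says."""

    def __init__(self, utterances: Iterable[str]) -> None:
        self._utterances = list(utterances)
        self.spoken: List[str] = []

    def incoming_utterances(self) -> Iterable[str]:
        return iter(self._utterances)

    def speak(self, text: str) -> None:
        self.spoken.append(text)


def echo_agent(utterance: str) -> str:
    """Placeholder agent; a real one might call an LLM or a LangChain chain here."""
    return f"You said: {utterance}"


# The same agent runs unchanged over two different (fake) channels.
web = FakeChannel(["book a table for two"])
phone = FakeChannel(["what's on my calendar?", "thanks"])
for channel in (web, phone):
    run_agent(channel, echo_agent)

print(web.spoken)    # ['You said: book a table for two']
print(phone.spoken)  # ["You said: what's on my calendar?", 'You said: thanks']
```

The design choice is simply to push everything channel-specific (codecs, sample rates, call signaling) into the adapters, so adding a telephone channel means writing one new adapter rather than a second agent.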

The group debated building native iOS apps versus web-based solutions, with Progressive Web Apps emerging as an interesting middle ground. I’m particularly intrigued by the idea of voice-enabled agents that can operate while you’re doing other activities - coding while walking the dog might soon be a reality.

Docker’s MCP Play: Revolutionary or Just Staying Relevant?

The group had some healthy skepticism about Docker’s new MCP registry. Having been close to Docker during its early days (I turned down an opportunity to join the team that became the networking portion of Swarm), I’ve watched its evolution with interest.

As I noted during our discussion, Docker was “brilliant at completely changing how we dealt with Linux-based applications” but then “the corporatization happened” and “it became this really hyper-political organization.”

Their MCP announcements remind me of "a Broadcom VMware announcement or a Cisco announcement": product managers with a second-market strategy, lots of meetings, and lots of approvals.

Karim suggested Docker’s moves feel “bolted on rather than baked in” and proposed that a server-sent events (SSE) version of MCP would offer better scalability and security. The group consensus was that Docker’s approach doesn’t solve the real challenges for Kubernetes environments.
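
For readers who haven't looked at SSE: it is a one-way HTTP stream of text events. MCP's HTTP+SSE transport, as described in the 2024-11-05 spec revision (newer revisions describe a Streamable HTTP transport instead), carries JSON-RPC messages as those events, with the server first sending an `endpoint` event that tells the client where to POST its requests. The sketch below only shows the wire framing. `sse_frame`, `parse_sse`, and the `/messages?session_id=abc123` path are hypothetical, and a real server would use an MCP SDK rather than hand-rolled strings.

```python
import json
from typing import List, Tuple, Union


def sse_frame(event: str, data: Union[dict, str]) -> str:
    """Encode one server-sent event; dicts are serialized as JSON."""
    payload = data if isinstance(data, str) else json.dumps(data)
    lines = [f"event: {event}"] + [f"data: {line}" for line in payload.split("\n")]
    return "\n".join(lines) + "\n\n"  # a blank line terminates the event


def _field_value(line: str, name: str) -> str:
    """Strip 'name:' and at most one following space, per the SSE format."""
    value = line[len(name) + 1:]
    return value[1:] if value.startswith(" ") else value


def parse_sse(stream: str) -> List[Tuple[str, str]]:
    """Minimal parser assuming LF line endings; ignores id, retry, and comment lines."""
    events = []
    for block in stream.split("\n\n"):
        if not block.strip():
            continue
        event, data_lines = "message", []  # "message" is the default SSE event type
        for line in block.split("\n"):
            if line.startswith("event:"):
                event = _field_value(line, "event")
            elif line.startswith("data:"):
                data_lines.append(_field_value(line, "data"))
        events.append((event, "\n".join(data_lines)))
    return events


# Hypothetical server output: tell the client where to POST, then answer a tools/list request.
stream = sse_frame("endpoint", "/messages?session_id=abc123")
stream += sse_frame("message", {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}})

for event, data in parse_sse(stream):
    print(event, data)
# endpoint /messages?session_id=abc123
# message {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}
```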

LangChain and MCPs: Coexistence, Not Competition

We spent some time discussing whether MCPs pose a threat to LangChain. The consensus was that they’re complementary rather than competitive. MCPs are primarily being used as plugins for cloud/desktop environments, while LangChain excels at building comprehensive agent-based workflows.

As Karim aptly put it, MCPs are expanding the tool ecosystem rather than replacing existing frameworks. This gives us more options for building agent-based workflows and creates new opportunities for rapid prototyping.

Looking Forward

These office hours continue to be one of the highlights of my week, a chance to connect with brilliant minds and explore emerging ideas in the AI middleware space. I'm particularly excited about:

- Exploring practical A2A demonstrations

- Further developing voice-enabled agents

- Seeing how the LangChain ecosystem evolves alongside MCPs

If you’re interested in joining our next session (Tuesday, April 29th at 2pm Central), drop me a message. These conversations are open to anyone interested in the future of AI middleware, regardless of technical background.

Until next time, Colin

Colin McNamara is the founder of Always Cool AI and organizer of the Austin LangChain AI Middleware Users Group (AIMUG). After leaving Oracle to start his own business, he focuses on AI integration patterns and agent-based workflows.
