Exploring the Future of Agent Infrastructure: Austin LangChain Office Hours Insights

Yesterday, we had another mind-expanding session during the Austin LangChain Office Hours. What started as a casual discussion about graph database memory integration quickly evolved into a deep dive on agent architecture, microservices, and the future of tools infrastructure. I want to share some key insights from our discussion that might help shape how we all think about building robust agent ecosystems.

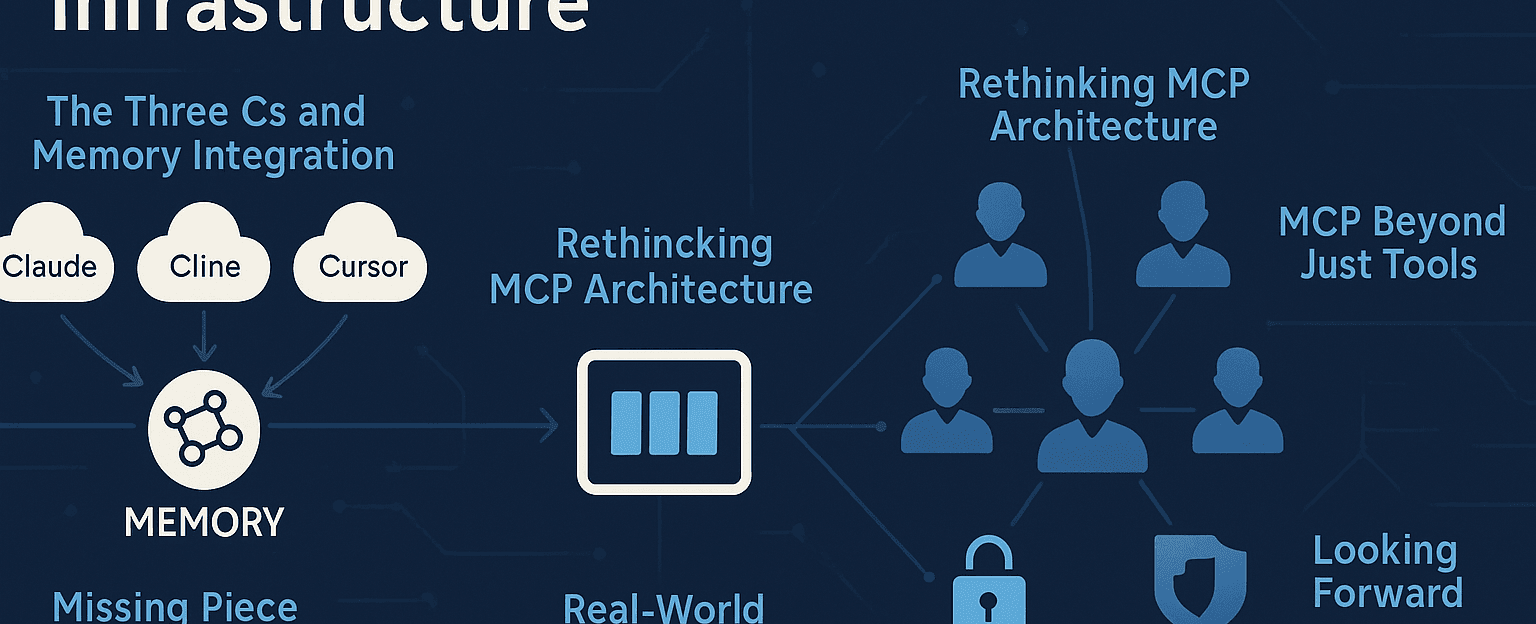

The “Three Cs” and Memory Integration

I kicked off the meeting by sharing my plans to get a graph database memory MCP server up and running with Claude, Cline, and Cursor - or what I’ve been calling “the three Cs” of my life. There’s something fascinating about integrating memory stores across different models and interfaces, though it does mean running a Docker container persistently. Is that overkill? Maybe, but sometimes you need to overengineer to learn what actually works.

Rethinking MCP Architecture: From Local to Networked

A revelation came during our discussion with Karim about the Model Context Protocol (MCP). While most of us have been running MCP servers locally over standard I/O (stdio), Karim demonstrated how easily one can be exposed as a Server-Sent Events (SSE) endpoint served by Uvicorn. This fundamentally changes how we think about deploying and accessing our tools.
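To make the networked transport a bit more concrete, here is a minimal standard-library sketch of what a server-to-client message might look like on the wire. MCP messages are JSON-RPC 2.0, and an SSE stream is plain text made of `field: value` lines ending in a blank line. The tool name `remember`, the `message` event name, and the helper functions are my own illustrative assumptions, not something Karim showed or the SDK's API.

```python
import json

def format_sse(data: dict, event: str = "message") -> str:
    """Frame one JSON payload as a single Server-Sent Events message."""
    payload = json.dumps(data, separators=(",", ":"))
    # An SSE message is a group of "field: value" lines closed by a blank line.
    return f"event: {event}\ndata: {payload}\n\n"

def parse_sse(frame: str):
    """Parse a single-event frame produced by format_sse into (event, data)."""
    fields = {}
    for line in frame.strip().splitlines():
        name, _, value = line.partition(": ")
        fields[name] = value
    return fields["event"], json.loads(fields["data"])

# Hypothetical JSON-RPC 2.0 response listing one tool on a memory server.
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"tools": [{"name": "remember", "description": "Store a fact"}]},
}

frame = format_sse(response)
event, decoded = parse_sse(frame)
print(frame)
```

The point of the sketch is that nothing here is tied to a local process: once messages are just text over HTTP, any agent on the network can consume them.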

What I’m now envisioning is a complete microservices layer where all MCPs are accessible over the network rather than just running locally. This creates some compelling possibilities:

- Smaller, more focused codebases

- Better management of tools via service registry

- Centralized logging and observability

- Security segregation based on access level

- Service advertisement for agents and swarms

- Integration of heterogeneous agents (not just LangGraph)
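As a rough illustration of the service registry and service advertisement ideas in the list above, here is a small in-memory sketch. Everything in it is hypothetical: the `ToolService` and `ServiceRegistry` names, the URLs, and the access levels are placeholders I made up, and a real deployment would more likely lean on an existing discovery system than a Python dict.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ToolService:
    name: str
    url: str                  # hypothetical network endpoint of an MCP server
    capabilities: frozenset   # what the service advertises to agents
    access_level: str = "internal"

class ServiceRegistry:
    """In-memory stand-in for a real service registry."""

    def __init__(self):
        self._services = {}

    def register(self, service: ToolService) -> None:
        self._services[service.name] = service

    def find(self, capability: str, allowed_levels=("public",)):
        """Return services offering a capability that the caller may see."""
        matches = (
            s for s in self._services.values()
            if capability in s.capabilities and s.access_level in allowed_levels
        )
        return sorted(matches, key=lambda s: s.name)

registry = ServiceRegistry()
registry.register(ToolService(
    "graph-memory", "http://mcp.internal:8001/sse",
    frozenset({"memory.read", "memory.write"}), "internal"))
registry.register(ToolService(
    "web-search", "http://mcp.internal:8002/sse",
    frozenset({"search"}), "public"))

# An agent with only public access cannot discover the memory service.
public_view = [s.name for s in registry.find("memory.read")]
internal_view = [s.name for s in registry.find("memory.read", ("public", "internal"))]
print(public_view, internal_view)
```

Filtering at discovery time is one simple way to get security segregation: an agent cannot call what it cannot find, though real enforcement still belongs on the service itself.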

As a network engineer at heart, I can’t help but think about “gluing shit together and calling it a career.” There’s something beautiful about creating a capability matrix of addressable tools and agents accessible via standard protocols.

MCP Beyond Just Tools

One eye-opening moment was realizing that MCP is about more than just tools. As Karim explained, it supports:

- Hard-coded resources

- Parameterized templates

- Prompts that can be returned to agents

- Resources addressable via URIs of the form scheme://resource-name

This opens up interesting authentication possibilities too. Since MCP can be run as a FastAPI service, we could implement role-based access control (RBAC) where resources and functions are selectively exposed based on user authentication. Imagine an executive admin who has read access across an executive team’s calendars but write access only for the CEO and CFO - all elegantly managed through MCP authentication.
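Here is a minimal sketch of the calendar example, assuming a simple role-to-resource grant table. The role name, the `calendar://` URIs, and the helper functions are all hypothetical, and this is plain Python rather than anything from the MCP SDK or FastAPI. In practice the role would come from whatever authentication layer sits in front of the server.

```python
from enum import Flag, auto

class Access(Flag):
    NONE = 0
    READ = auto()
    WRITE = auto()

# Hypothetical grants: role -> resource URI -> permitted access.
GRANTS = {
    "executive_admin": {
        "calendar://ceo": Access.READ | Access.WRITE,
        "calendar://cfo": Access.READ | Access.WRITE,
        "calendar://cto": Access.READ,
    },
}

def is_allowed(role: str, resource: str, needed: Access) -> bool:
    """True if the role holds every access bit in `needed` for the resource."""
    granted = GRANTS.get(role, {}).get(resource, Access.NONE)
    return (granted & needed) == needed

def visible_resources(role: str, needed: Access = Access.READ):
    """Resources a server could choose to expose to this role."""
    return sorted(
        uri for uri in GRANTS.get(role, {}) if is_allowed(role, uri, needed)
    )

readable = visible_resources("executive_admin")
writable = visible_resources("executive_admin", Access.WRITE)
print(readable, writable)
```

An unknown role falls through to `Access.NONE`, so the default is to expose nothing.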

The Tooling Gap in A2A Interactions

Karim made an astute observation about agent-to-agent (A2A) implementations: there’s simply too much boilerplate. While the generative AI landscape has seen tooling dramatically reduce barriers to entry, A2A implementations haven’t kept pace.

I’ve spent my career dealing with “bubble gum and baling wire” solutions to get early implementations running, but better tooling is essential for widespread adoption. Joseph showed us Lindy, a WYSIWYG agent framework that’s reducing these barriers with its browser-based approach.

The Missing Piece: Logging and Observability

Perhaps the most critical gap in our current agent ecosystem is comprehensive logging and traceability. When agents spawn MCPs and tools execute actions, how do we maintain visibility across the stack?

I’m particularly interested in:

- Granular logging at the tool level

- Mapping tool usage back to specific agent sessions

- Creating comprehensive audit trails for governance

- Ensuring visibility from agent inputs through middleware to tool executions
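One way to picture the first two items on that list is a decorator that records every tool call together with the agent session that triggered it. This is only a sketch under my own assumptions: the `audited` decorator, the `AUDIT_LOG` list, and the `lookup_employee` tool are invented for illustration, and a real system would ship these records to a proper log sink instead of a Python list.

```python
import contextvars
import functools
import json
import time

# Carries the current agent session ID through nested tool calls.
session_id = contextvars.ContextVar("session_id", default="unknown")
AUDIT_LOG = []  # stand-in for a real log sink

def audited(tool):
    """Wrap a tool so each call leaves one audit record, even on failure."""
    @functools.wraps(tool)
    def wrapper(*args, **kwargs):
        record = {
            "session": session_id.get(),
            "tool": tool.__name__,
            "input": json.dumps({"args": args, "kwargs": kwargs}, default=str),
            "status": "ok",
        }
        start = time.perf_counter()
        try:
            return tool(*args, **kwargs)
        except Exception as exc:
            record["status"] = f"error: {exc}"
            raise
        finally:
            record["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
            AUDIT_LOG.append(record)
    return wrapper

@audited
def lookup_employee(employee_id):
    # Hypothetical tool body; a real one would query an HR system.
    return {"id": employee_id, "name": "Example Employee"}

token = session_id.set("session-1234")  # set when an agent session starts
result = lookup_employee(42)
session_id.reset(token)                 # restore the previous value afterwards
print(AUDIT_LOG[0]["session"], AUDIT_LOG[0]["tool"], AUDIT_LOG[0]["status"])
```

Because the session ID lives in a context variable, the tool itself never needs to know about it, which keeps the logging concern out of the tool code.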

With agents spread across multiple frameworks (LangGraph, Lindy, etc.), we need an open protocol approach to logging. OpenTelemetry integration with visualization tools like LangSmith or Langfuse seems promising, but we need to ensure consistency across heterogeneous agent ecosystems.

Real-World Applications: Agents for Business Process Automation

Joseph shared some exciting potential applications, including using agents for payroll processing. We discussed implementing a human-in-the-loop control before executing payroll to ensure security and compliance.

I suggested using an open-source ERP system like Odoo, connecting it via LangGraph with a human approval loop, and even adding physical check printing as a “flex” for demonstrations. Add voice AI on top of that, and you’ve got an impressive showcase of agent capabilities.
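The human approval loop can be reduced to a very small idea: the step that moves money refuses to run until a person has signed off. The sketch below shows that gate in plain Python. It is not LangGraph or Odoo code, and the `PayrollRun` type, the status strings, and the function names are hypothetical placeholders.

```python
from dataclasses import dataclass

@dataclass
class PayrollRun:
    period: str
    total: float
    status: str = "pending_approval"
    reviewed_by: str = ""

def prepare_payroll(period: str, amounts) -> PayrollRun:
    """Agent step: assemble the run, but do not execute anything yet."""
    return PayrollRun(period, round(sum(amounts), 2))

def review(run: PayrollRun, approver: str, approve: bool) -> None:
    """Human step: record who reviewed the run and what they decided."""
    run.status = "approved" if approve else "rejected"
    run.reviewed_by = approver

def execute_payroll(run: PayrollRun) -> bool:
    """Agent step: only proceeds when a human has approved the run."""
    if run.status != "approved":
        return False
    run.status = "executed"
    return True

run = prepare_payroll("2025-05", [1000.0, 2500.5])
blocked = execute_payroll(run)        # refused: nobody has approved yet
review(run, approver="cfo", approve=True)
executed = execute_payroll(run)       # now allowed
print(blocked, executed, run.status)
```

In a graph-based agent the review step would be a pause in the workflow rather than a function call, but the invariant is the same: no approval, no execution.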

Looking Forward

There’s something profound happening in this space. As we create more sophisticated agent architectures with proper security, logging, and governance, we’re moving toward systems that can safely automate increasingly complex workflows.

Our discussion reminded me that those of us pivoting early into this space are in a powerful position. While many tech workers are struggling in the current market, AI and agent technologies are booming. The key is continuous reskilling - learning the new stuff before the younger, cheaper talent makes you obsolete.

We’ve planned more discussions for our upcoming meetings, including a lightning talk from Joseph on tool survey findings and a deeper look at the recently released Qwen 3. If you’re in Austin and interested in these topics, join us for our weekly office hours. The future is being built, conversation by conversation.

What agent infrastructure challenges are you facing? Drop your thoughts in the comments below, and let’s continue the discussion.
